The client is a prominent European security firm specializing in remote monitoring and facility protection. Its operations span more than 190 locations worldwide, including high-security logistics centers and corporate hubs. Its centralized Security Operations Center (SOC) monitors more than 20,000 camera streams around the clock.

As their site portfolio expanded, the client faced a critical "scalability wall" where hiring more staff was no longer a viable solution. Key pain points included:

The client needed a custom Computer Vision (CV) ecosystem that could:

With Intelligent Edge-to-Cloud Integration

We developed a multi-tenant computer vision platform that processes video directly on site (at the edge) and filters out noise there. The system was designed to achieve near-zero latency and to keep functioning during network fluctuations.
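One common way to suppress noise at the edge is a temporal persistence filter: an alert fires only when a detection appears in enough of the most recent frames, so single-frame flicker (shadows, reflections, compression artifacts) never reaches an operator. The sketch below assumes this technique; the class name `PersistenceFilter` and the `window`/`min_hits` parameters are hypothetical and not taken from the client's platform.

```python
from collections import deque


class PersistenceFilter:
    """Hypothetical edge-side noise filter (an illustrative assumption).

    An alert opens only when at least `min_hits` of the last `window`
    frames contain a detection; it closes once the window has no hits.
    """

    def __init__(self, window: int = 5, min_hits: int = 3) -> None:
        if not 0 < min_hits <= window:
            raise ValueError("require 0 < min_hits <= window")
        self.history = deque(maxlen=window)  # per-frame detection flags
        self.min_hits = min_hits
        self.active = False  # True while an alert is open

    def update(self, detected: bool) -> bool:
        """Feed one frame's result; return True only on the frame an alert opens."""
        self.history.append(detected)
        hits = sum(self.history)
        if not self.active and hits >= self.min_hits:
            self.active = True
            return True
        if self.active and hits == 0:
            self.active = False  # scene is clear; a later event can alert again
        return False


frames = [True, False, True, True, True, False, False, False, False, False, True]
f = PersistenceFilter(window=5, min_hits=3)
alerts = [f.update(d) for d in frames]
print(alerts.index(True), sum(alerts))  # 3 1
```

In this sketch a sustained detection produces exactly one alert, while an isolated detection is discarded; `window` and `min_hits` trade responsiveness against false-positive suppression.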

WORKFLOW

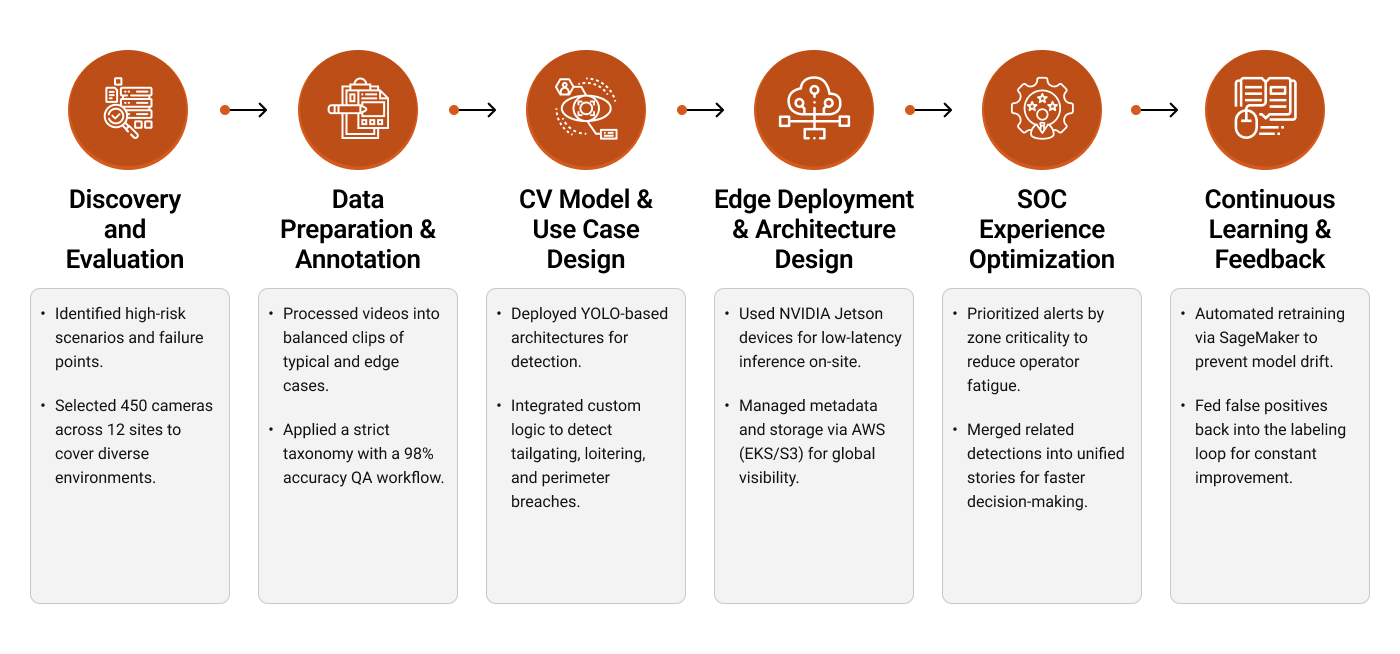

To help the client move from a manual surveillance setup to an automated AI-driven CV system, we followed a structured six-step implementation process:

We began with a four-week discovery sprint to align the CV solution with real incident patterns and operator needs.

The client had petabytes of archived video, but it was not labeled in a way that supported reliable model training. We set up a scalable data pipeline focused on quality, coverage, and repeatability.
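A pipeline focused on coverage typically checks that every relevant combination of environment and object class has enough labeled examples before training starts. The sketch below is a hypothetical coverage check; the `Label` fields, the site-type grouping, and the `min_count` threshold are illustrative assumptions, not details of the client's pipeline.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Label(NamedTuple):
    site_type: str   # hypothetical grouping, e.g. "logistics" or "office"
    class_name: str  # hypothetical object class, e.g. "person" or "vehicle"


def coverage_gaps(
    labels: Iterable[Label],
    site_types: list[str],
    classes: list[str],
    min_count: int,
) -> list[tuple[str, str, int]]:
    """Return (site_type, class_name, count) for every under-labeled combination."""
    counts = Counter((lbl.site_type, lbl.class_name) for lbl in labels)
    return [
        (site, cls, counts[(site, cls)])  # Counter returns 0 for unseen pairs
        for site in site_types
        for cls in classes
        if counts[(site, cls)] < min_count
    ]


labels = [Label("logistics", "person")] * 3 + [Label("office", "person")]
print(coverage_gaps(labels, ["logistics", "office"], ["person", "vehicle"], min_count=2))
# [('logistics', 'vehicle', 0), ('office', 'person', 1), ('office', 'vehicle', 0)]
```

In a pipeline built this way, the reported gaps would be routed back to annotation before the next training run, which is one way to make coverage repeatable rather than ad hoc.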

Using the curated labels, we implemented a modular computer vision model stack, trained and tuned per use case, so performance stayed consistent across site layouts.
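A modular stack can be pictured as a registry of independent use-case modules, each with its own tuned confidence threshold, from which every site enables only what its layout needs. The sketch below assumes that structure; the module names (`intrusion`, `loitering`), the `(label, score)` detection format, and the stub detectors are hypothetical placeholders for real models.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical detection format: (label, confidence score in [0, 1]).
Detection = tuple[str, float]


@dataclass
class UseCaseModule:
    name: str
    detect: Callable[[bytes], list[Detection]]  # any detector returning scored labels
    threshold: float  # tuned per use case on validation footage


@dataclass
class ModelStack:
    modules: dict[str, UseCaseModule] = field(default_factory=dict)

    def register(self, module: UseCaseModule) -> None:
        self.modules[module.name] = module

    def run(self, frame: bytes, enabled: list[str]) -> dict[str, list[Detection]]:
        """Run only the modules a site enables, each with its own threshold."""
        results: dict[str, list[Detection]] = {}
        for name in enabled:
            module = self.modules[name]
            results[name] = [d for d in module.detect(frame) if d[1] >= module.threshold]
        return results


# Stub detectors stand in for trained models in this illustration.
stack = ModelStack()
stack.register(UseCaseModule("intrusion", lambda f: [("person", 0.92), ("person", 0.41)], 0.6))
stack.register(UseCaseModule("loitering", lambda f: [("person", 0.55)], 0.5))
print(stack.run(b"frame-bytes", enabled=["intrusion"]))  # {'intrusion': [('person', 0.92)]}
```

Keeping thresholds per module means tuning one use case for a difficult layout does not shift the behavior of the others.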

To keep latency low and avoid streaming raw video to the cloud, we processed footage on site and sent only event data upstream.
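Because each event is only a few bytes of metadata, it is practical to queue events on the edge device while the network is unavailable and forward them once the link recovers. The sketch below assumes a JSON event payload and a store-and-forward queue; the `Event` fields, the `EdgeUplink` class, and the `send` transport hook are hypothetical, not the client's actual interface.

```python
import json
from collections import deque
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass
class Event:
    """Hypothetical event schema: compact metadata instead of raw video."""
    camera_id: str
    timestamp: float  # seconds since epoch
    label: str
    score: float


class EdgeUplink:
    """Store-and-forward sender: events queue locally while the link is down."""

    def __init__(self, send: Callable[[bytes], bool], max_buffer: int = 10_000) -> None:
        self.send = send  # hypothetical transport hook; returns False on failure
        self.buffer = deque(maxlen=max_buffer)  # oldest events dropped when full

    def publish(self, event: Event) -> None:
        self.buffer.append(json.dumps(asdict(event)).encode("utf-8"))
        self.flush()

    def flush(self) -> int:
        """Send queued events in order; stop at the first failure. Returns count sent."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # link unavailable: keep the event for the next attempt
            self.buffer.popleft()
            sent += 1
        return sent
```

Events are removed from the queue only after a successful send, so a dropped connection delays delivery instead of losing data, up to the `max_buffer` limit.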

All events and settings were made available through secure APIs and role-based dashboards for SOC teams and admins.
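In a multi-tenant platform, each API request has to be checked against both the caller's tenant and role. The sketch below shows one deny-by-default way to do that; the role names, the permission strings (`events:read`, `settings:write`), and the `authorize` function are hypothetical illustrations, not the client's API.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; unknown roles get no permissions.
PERMISSIONS: dict[str, frozenset[str]] = {
    "operator": frozenset({"events:read"}),
    "admin": frozenset({"events:read", "settings:write"}),
}


@dataclass(frozen=True)
class User:
    tenant_id: str
    role: str
    sites: frozenset[str]  # sites this user is assigned to


def authorize(user: User, action: str, tenant_id: str, site_id: str) -> bool:
    """Allow an action only within the user's tenant, assigned sites, and role."""
    return (
        user.tenant_id == tenant_id
        and site_id in user.sites
        and action in PERMISSIONS.get(user.role, frozenset())
    )
```

Checking the tenant first keeps one customer's events invisible to another even if role definitions are misconfigured.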

The goal was not just detection accuracy, but faster, clearer decisions for security teams.

To keep performance stable at scale, we operationalized the full lifecycle from monitoring to retraining and controlled rollouts.
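Controlled rollouts are often implemented as staged canaries: a new model version reaches a growing share of cameras only while its monitored metrics stay within bounds. The sketch below gates promotion on the false-positive rate reported by operators; the stage fractions, thresholds, and names (`StageMetrics`, `rollout_decision`) are hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass

# Hypothetical rollout stages: fraction of cameras running the candidate model.
ROLLOUT_STAGES = (0.05, 0.25, 1.0)


@dataclass
class StageMetrics:
    alerts: int
    false_positives: int  # alerts that operators marked as not real incidents

    @property
    def fp_rate(self) -> float:
        return self.false_positives / self.alerts if self.alerts else 0.0


def rollout_decision(
    stage: int,
    candidate: StageMetrics,
    baseline: StageMetrics,
    max_relative_increase: float = 0.10,
    min_alerts: int = 200,
) -> str:
    """Return "hold", "rollback", "promote", or "complete" for a candidate model."""
    if candidate.alerts < min_alerts:
        return "hold"  # not enough operator feedback yet to judge
    if candidate.fp_rate > baseline.fp_rate * (1 + max_relative_increase):
        return "rollback"  # noticeably noisier than the current model
    if stage + 1 < len(ROLLOUT_STAGES):
        return "promote"  # move to the next, larger share of cameras
    return "complete"  # already running on all cameras


baseline = StageMetrics(alerts=1000, false_positives=200)  # 20% false-positive rate
print(rollout_decision(0, StageMetrics(300, 50), baseline))  # promote
print(rollout_decision(0, StageMetrics(300, 90), baseline))  # rollback
```

Requiring a minimum number of alerts before deciding avoids promoting or rolling back on a handful of early, noisy observations.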

3x camera coverage per operator without added headcount

~50% reduction in false positive alerts

~55% faster analytics setup for new facilities and camera layouts

~75% lower upstream bandwidth usage